What is the Agent's Blast Radius?

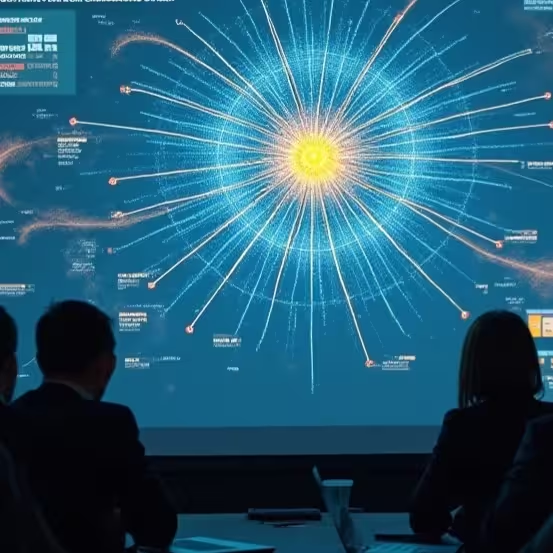

An agent's true power isn't its static permissions; it's the Cumulative Operational Authority it can assemble at runtime. We call this total potential impact the Blast Radius.

Our platform is the first to programmatically calculate this metric, giving you a concrete, auditable, and machine-verifiable measure of an agent's potential for harm. We calculate three variants:

- Static Blast Radius: The agent's total scope from its static permissions alone, before any runtime invocation.

- Dynamic Blast Radius: The actual, expanded scope during a specific session, including delegated authority.

- Maximum Potential Blast Radius: The theoretical upper bound of risk, accounting for all possible permission delegations.
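
To make the distinction between the three variants concrete, here is a minimal sketch. It is not the platform's actual algorithm, which this page does not describe. It assumes that scope can be modeled as a set of permission strings and that delegation forms a directed graph between agents; the `Agent` class, the `can_borrow_from` field, and the permission names are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Agent:
    # Hypothetical model: an agent holds static permissions and may
    # borrow authority from the agents named in can_borrow_from.
    name: str
    permissions: set[str]
    can_borrow_from: set[str] = field(default_factory=set)


def static_blast_radius(agent: Agent) -> set[str]:
    """Scope from static permissions only, before any runtime invocation."""
    return set(agent.permissions)


def dynamic_blast_radius(
    agent: Agent, session_delegations: list[str], registry: dict[str, Agent]
) -> set[str]:
    """Scope in one session: static permissions plus the permissions of
    agents that actually delegated authority during that session."""
    scope = set(agent.permissions)
    for name in session_delegations:
        scope |= registry[name].permissions
    return scope


def max_potential_blast_radius(agent: Agent, registry: dict[str, Agent]) -> set[str]:
    """Upper bound: follow every possible delegation edge transitively."""
    seen: set[str] = set()
    stack = [agent.name]
    scope: set[str] = set()
    while stack:
        name = stack.pop()
        if name in seen:
            continue  # guard against delegation cycles
        seen.add(name)
        scope |= registry[name].permissions
        stack.extend(registry[name].can_borrow_from)
    return scope


# Illustrative data only.
registry = {
    "triage": Agent("triage", {"tickets:read"}, {"deploy"}),
    "deploy": Agent("deploy", {"ci:trigger"}, {"infra"}),
    "infra": Agent("infra", {"prod:write"}),
}
triage = registry["triage"]

static_scope = static_blast_radius(triage)
dynamic_scope = dynamic_blast_radius(triage, ["deploy"], registry)
max_scope = max_potential_blast_radius(triage, registry)
```

In this toy setup the static scope of `triage` contains only `tickets:read`, the session scope adds `ci:trigger`, and the maximum potential scope also reaches `prod:write` through the `deploy` to `infra` delegation chain, even though no session has exercised it.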

By simulating the Blast Radius of a proposed change, you can understand its true impact before you ever commit code.
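
One way to picture such a simulation is as a diff between the permissions an agent can reach before and after the change. The sketch below is illustrative only and rests on assumptions not stated on this page: permissions are plain strings, delegation is a directed graph, and the names `reachable_permissions` and `simulate_change` are hypothetical.

```python
def reachable_permissions(agent, grants, delegations):
    """Collect every permission the agent can reach.

    grants:      maps agent name -> set of its own permissions
    delegations: maps agent name -> set of agents it may borrow authority from
    """
    seen, stack, scope = set(), [agent], set()
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # guard against delegation cycles
        seen.add(current)
        scope |= grants.get(current, set())
        stack.extend(delegations.get(current, set()))
    return scope


def simulate_change(agent, grants, current_delegations, proposed_delegations):
    """Compare reachable permissions before and after a proposed change."""
    before = reachable_permissions(agent, grants, current_delegations)
    after = reachable_permissions(agent, grants, proposed_delegations)
    return {"added": after - before, "removed": before - after}


# Illustrative data only: the proposed change lets "deploy" borrow from "infra".
grants = {
    "triage": {"tickets:read"},
    "deploy": {"ci:trigger"},
    "infra": {"prod:write"},
}
current = {"triage": {"deploy"}}
proposed = {"triage": {"deploy"}, "deploy": {"infra"}}

impact = simulate_change("triage", grants, current, proposed)
print(sorted(impact["added"]))  # ['prod:write']
```

Here a change that never touches `triage` directly still extends its reach to `prod:write`, which is the kind of indirect expansion a pre-commit simulation is meant to surface.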